As the world moves towards greater energy conservation, the demand for low power consumption in electronic equipment is increasing. This, in turn, increases the importance of power supply design technology. Selecting the proper inductor is a key point in actual power supply design.

After the ICs, the inductor is a core component of DC-DC converters, and selecting an appropriate inductor can yield high conversion efficiency. The main parameters for selecting the proper inductor include the inductance, rated current, AC resistance, and DC resistance. Concepts unique to power inductors are also contained within these parameters.

For example, power inductors have two types of rated currents, but have you ever wondered what the difference is between the two?

To answer that question, let us explain the two separate rated currents of power inductors.

■ Reason for two types of rated currents

There are two methods of determining the rated current of power inductors: “rated current based on self temperature rise,” and “rated current based on the rate of change in inductance value.”

Each of these methods has a separate, important meaning. “Rated current based on self temperature rise” specifies the rated current using the heat generation of the component as the index. Operation in excess of this range may damage the component or cause the equipment it is installed in to malfunction.

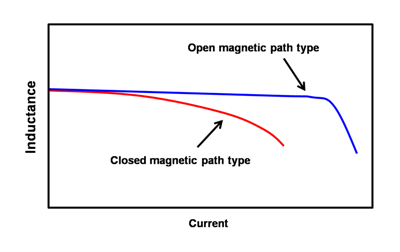
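As a rough illustration of the temperature-rise criterion, the sketch below estimates self-heating from the DC copper loss, P = I² × R_dc. It is a simplified model, not a datasheet method: the thermal resistance `r_th_c_per_w`, the 40 °C limit, and all numeric values are assumptions chosen for illustration, and AC losses are ignored. The function names are hypothetical.

```python
import math


def copper_loss_w(i_rms_a: float, r_dc_ohm: float) -> float:
    """DC copper loss in watts: P = I^2 * R_dc."""
    return i_rms_a ** 2 * r_dc_ohm


def estimated_temp_rise_c(i_rms_a: float, r_dc_ohm: float,
                          r_th_c_per_w: float) -> float:
    """Estimated self temperature rise in deg C.

    Assumes a single lumped thermal resistance (deg C per watt),
    which is an illustrative simplification.
    """
    return copper_loss_w(i_rms_a, r_dc_ohm) * r_th_c_per_w


def temp_rise_rated_current_a(r_dc_ohm: float, r_th_c_per_w: float,
                              max_rise_c: float) -> float:
    """Current at which the estimated rise reaches max_rise_c."""
    return math.sqrt(max_rise_c / (r_dc_ohm * r_th_c_per_w))


if __name__ == "__main__":
    # Assumed example values, not taken from any real component.
    r_dc = 0.05    # ohms
    r_th = 50.0    # deg C per watt (hypothetical)
    limit = 40.0   # deg C (assumed limit; check the actual datasheet)

    print(estimated_temp_rise_c(2.0, r_dc, r_th))
    print(temp_rise_rated_current_a(r_dc, r_th, limit))
```

With these assumed values, the estimated rise at 2 A is about 10 °C, and the current that reaches the assumed 40 °C limit is roughly 4 A. Because loss scales with the square of the current, a modest increase in current beyond the rating produces a disproportionate increase in heating.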

On the other hand, “rated current based on the rate of change in inductance value” specifies the rated current using the rate of decrease in the inductance value as the index. Operation in excess of this range may increase the ripple current, causing the IC control to become unstable. The tendency for magnetic saturation, i.e., the tendency for the inductance value to drop, differs according to the magnetic path structure of the inductor.
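To see why a drop in inductance increases the ripple current, the following sketch uses the standard ideal ripple formula for a buck (step-down) converter in continuous conduction, ΔI = (V_in − V_out) × D / (L × f_sw), with D = V_out / V_in. The choice of a buck topology, the 30% inductance drop, and all numeric values are assumptions for illustration only; the function names are hypothetical.

```python
def buck_ripple_current_a(v_in: float, v_out: float,
                          inductance_h: float, f_sw_hz: float) -> float:
    """Peak-to-peak inductor ripple current of an ideal buck converter
    in continuous conduction mode."""
    duty = v_out / v_in
    return (v_in - v_out) * duty / (inductance_h * f_sw_hz)


def derated_inductance_h(nominal_h: float, drop_fraction: float) -> float:
    """Inductance after a fractional drop (e.g. 0.3 for a 30% drop)."""
    return nominal_h * (1.0 - drop_fraction)


if __name__ == "__main__":
    # Assumed example operating point, not taken from the article.
    v_in, v_out = 12.0, 3.3   # volts
    f_sw = 1.0e6              # switching frequency in Hz
    l_nom = 2.2e-6            # nominal inductance in henries

    ripple_nominal = buck_ripple_current_a(v_in, v_out, l_nom, f_sw)
    # Hypothetical case: the DC bias has reduced the inductance by 30%.
    l_sat = derated_inductance_h(l_nom, 0.30)
    ripple_saturated = buck_ripple_current_a(v_in, v_out, l_sat, f_sw)

    print(f"nominal ripple:   {ripple_nominal:.3f} A")
    print(f"saturated ripple: {ripple_saturated:.3f} A")
    print(f"increase factor:  {ripple_saturated / ripple_nominal:.3f}")
```

Since the ripple current is inversely proportional to the inductance in this model, a 30% drop in inductance raises the ripple by a factor of 1 / 0.7, or about 1.43, regardless of the other operating values.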